Three CDI and coding-related thoughts …

- A dark vision of ChatGPT.

In case you’ve been living under a rock, there’s a new AI-powered program called ChatGPT that can generate readable (perhaps even good, and at least usually quite understandable) copy from prompts entered by a human user.

This development has generated angst and anxiety, but also optimism. For example, physicians might use ChatGPT to generate discharge summaries, an improvement over copy/paste that could also reduce administrative burden.

But if your mind tends toward the dark, as mine sometimes does, has anyone thought about what this tool could do in the hands of a rapacious auditor? Imagine a ChatGPT trained on hundreds of thousands of denial letters, with an auditor prompting “write me a sepsis denial based on sepsis-3 criteria” and the tool spewing them out. That could quickly overwhelm hospitals, as has already happened to some science fiction magazines (a handful of SF editors have had to shut down submissions after receiving thousands of AI-generated stories).

Scary thought. If you’re a RAC auditor, please don’t do this.

- Problems cleaning up the problem list.

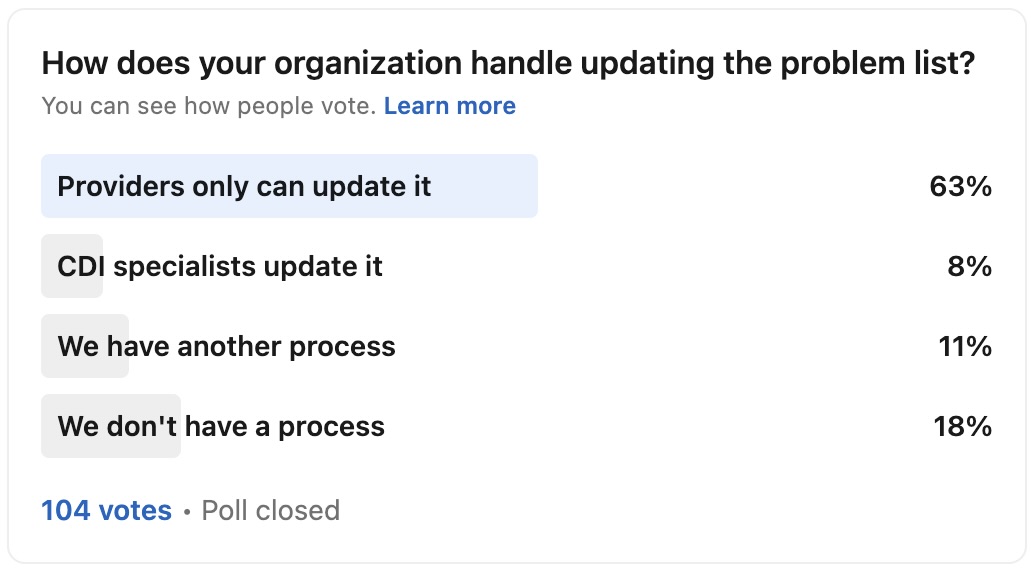

My problem list poll recently closed. You can view the final numbers in the attached image.

Biggest takeaway: Most organizations don’t seem to trust their CDI professionals to update the problem list (only 8% permit this practice; 63% leave it up to the provider).

Problem lists are often so useless that some organizations ignore them during their CDI reviews, especially on the inpatient side. They appear to have far more utility for prospective reviews. But imagine if they were clean and accurate.

My suggestion: CDI needs to own problem list maintenance. Step into it, make a difference. I’m a fan of this comment from Sally Hart, MS, BSN, RN, CCDS, CPHQ, CRCR, on my original LinkedIn post: “Have a policy and process built on compliance and top of license skills that leverage your highly trained and reliable CDI experts. Validate changes with providers – stop expecting them to do all the housekeeping. Imagine having complete and accurate problem lists across the care continuum!”

- The RAC program has issues.

Shout-out to Ronald Hirsch, MD, FACP, CHCQM, CHRI, for his recent RAC Monitor piece “RAC Review Reveals Glaring Shortcomings.” The link to the article is below, in case you missed it. Apparently, CMS recently (and quietly) posted a review of the RAC program’s 2019 audit activity on its website. Although the program generated significant dollars for the Medicare trust fund, the RACs’ accuracy rates weren’t pretty: 56% of denials were reversed at level 1, and 33% of denials taken to qualified independent contractors at level 2 were overruled in favor of the provider. I shudder to think of the hours spent on these appeals.

This is what a community should do: keep each other apprised of underreported developments, niche news, interesting findings, etc. Nice find, Dr. Hirsch!

RAC Monitor article: https://racmonitor.medlearn.com/rac-review-reveals-glaring-shortcomings/
